From poor data foundations to unclear objectives, most AI initiatives collapse long before they deliver value. Across our AI Readiness Masterclasses in Dubai and Ras Al Khaimah, the data shows a consistent trend: MENA organisations are adopting AI tools tactically (mainly for content generation), but their foundational data practices, governance maturity, and integration readiness remain insufficient for scalable AI deployment.

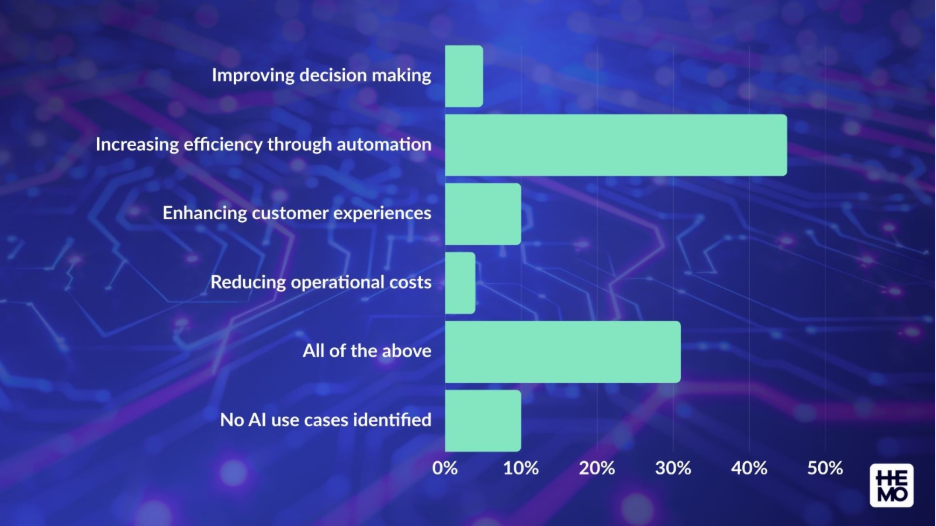

1. Expected Business Value of AI

Across our AI Readiness Masterclasses, most participants framed AI value in operational terms, not strategic ones. The strongest expectations centred on efficiency gains: automating repetitive work, reducing manual effort, and speeding up existing processes.

AI is viewed primarily as a workflow-automation tool, not a strategic driver of transformation.

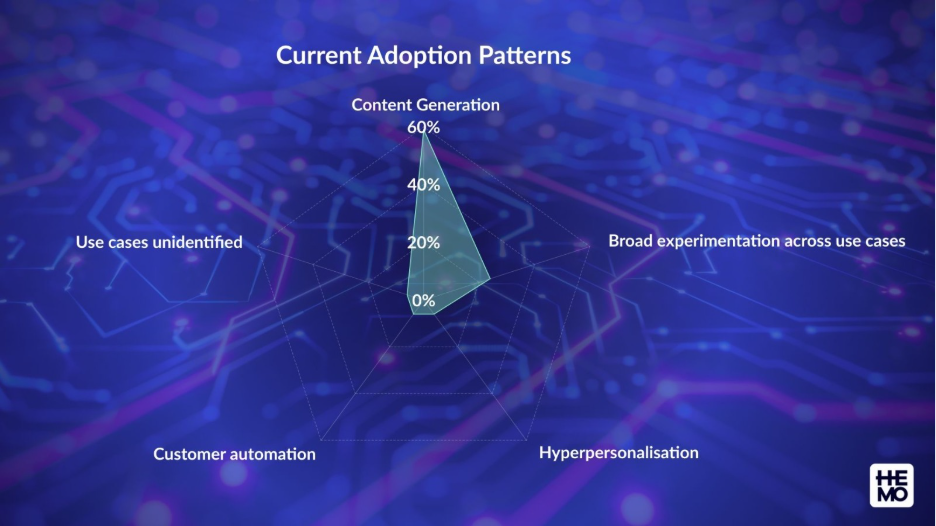

2. Current Adoption Patterns

Most organisations are experimenting at the interface layer, using generative tools for content creation, summaries, or basic automation. These tools are easy to adopt, sit outside core systems, and require minimal data integration.

Far fewer teams are applying AI to customer intelligence, personalisation, or operational decisioning, where the real value lies. These use cases demand clean data, consistent definitions, and integrated pipelines.

Adoption is “top layer” (application-level generative tools), not structural (data, pipelines, integration).

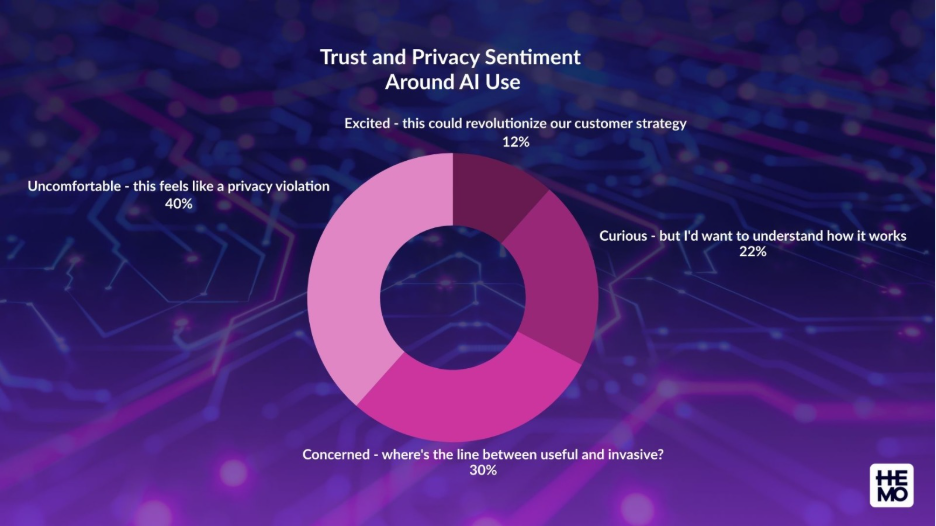

3. Trust and Privacy Perception

When asked how they would feel about using an AI product fed with behavioural and personal data, attendees responded:

Trust and privacy are the strongest inhibitors of internal AI deployment.

4. Key Takeaways From Participants

Across all sessions, the most frequently surfaced terms were:

privacy, data quality, governance, planning, ethics, readiness, integration

Teams understand the dependencies conceptually but lack frameworks to operationalise them.

The Gap Between AI Hype and AI Reality

Every organisation wants to “adopt AI,” but very few are set up to make it work. Don’t take our word for it; here are the stats:

- According to a 2025 survey by Gartner, 63% of organisations said they do not have, or aren’t sure they have, proper data-management practices to support AI.

- The same survey warns that organisations without “AI-ready data” are likely to abandon 60% of their AI projects by 2026.

Here’s what that means in practice:

Poor or Incomplete Data Foundations

Data exists in silos across different tools, departments and functions with:

- No unified model

- Conflicting definitions

- Missing lineage

- Inconsistent timestamps

- No single source of truth

AI cannot produce stable outputs when the data feeding it isn’t stable.

How this impacts your business: When teams can’t agree on the same data, decisions slow down, trust erodes, and AI outputs get challenged instead of acted on.
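To make “inconsistent timestamps” concrete, here is a minimal sketch. It assumes two hypothetical source systems: a CRM that exports naive local times in Gulf Standard Time (UTC+4) and an analytics tool that emits Unix epoch seconds. Converting both to timezone-aware UTC before joining is one common remedy; the formats and the fixed +4 offset are illustrative assumptions, not a description of any specific tool.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the hypothetical CRM stores naive local times in Gulf Standard Time (UTC+4, no daylight saving).
GST = timezone(timedelta(hours=4))


def crm_to_utc(value: str) -> datetime:
    """Parse a naive 'YYYY-MM-DD HH:MM:SS' CRM string as GST and convert it to UTC."""
    local = datetime.strptime(value, "%Y-%m-%d %H:%M:%S").replace(tzinfo=GST)
    return local.astimezone(timezone.utc)


def analytics_to_utc(epoch_seconds: int) -> datetime:
    """Convert Unix epoch seconds (always UTC) from the hypothetical analytics tool."""
    return datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)


# The same purchase, as recorded by two systems in two different formats.
crm_event = crm_to_utc("2025-03-01 14:00:00")
web_event = analytics_to_utc(1740823200)

print(crm_event.isoformat(), web_event.isoformat())
print("Same moment:", crm_event == web_event)
```

Without the conversion, the two records look four hours apart and any join or “time since last purchase” feature built on them is quietly wrong.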

Weak Governance and Undefined Data Ownership

AI requires predictable data contracts and strict ownership. Without:

- Data owners (accountable for definitions)

- Stewards (ensuring operational hygiene)

- Access controls

- Audit logs

- Retention and consent policies

…you end up with unmanaged data feeding an unmanaged system.

Why this is bad for your business: When something goes wrong, no one owns the problem, so issues linger, risks compound, and problems surface only when they become expensive.
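One lightweight way to make ownership and contracts explicit is to write them down as code next to the pipeline. The sketch below is illustrative only: the `DataContract` class, its fields, and the example `orders` dataset are hypothetical, not a standard format or any particular product’s API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataContract:
    """Hypothetical data contract: who owns a dataset and what shape it must have."""
    dataset: str
    owner: str              # accountable for definitions
    steward: str            # ensures day-to-day operational hygiene
    schema: dict            # column name -> expected Python type
    retention_days: int     # how long records may be kept
    requires_consent: bool = True
    allowed_roles: frozenset = field(default_factory=frozenset)  # access control


def violations(contract: DataContract, record: dict) -> list:
    """Return human-readable contract violations for a single record."""
    problems = []
    for column, expected_type in contract.schema.items():
        if column not in record:
            problems.append(f"missing column '{column}'")
        elif not isinstance(record[column], expected_type):
            problems.append(f"'{column}' should be {expected_type.__name__}")
    for extra in sorted(set(record) - set(contract.schema)):
        problems.append(f"unexpected column '{extra}'")
    return problems


orders = DataContract(
    dataset="orders",
    owner="head-of-commerce@example.com",
    steward="data-engineering@example.com",
    schema={"order_id": str, "amount_aed": float, "created_at": str},
    retention_days=730,
    allowed_roles=frozenset({"analyst", "finance"}),
)

print(violations(orders, {"order_id": "A1", "amount_aed": "99", "region": "UAE"}))
```

The point is less the code than the fact that every dataset has a named owner and steward, and that a broken record is rejected with a reason someone is accountable for.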

No Monitoring or Observability

Most companies lack:

- Freshness checks

What it means: A system that automatically checks whether your data is up to date.

In simpler terms: Imagine making business decisions using last week’s sales figures while believing they are today’s. That’s what happens when you don’t check data freshness.

Why it matters for AI: If the model is trained on stale data → the predictions will be stale too.

AI outputs are only as current as the data the model sees. If decisions are made using outdated information, the result can be missed opportunities and late reactions to market or customer changes.
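A freshness check can be as simple as comparing each table’s last update time against an agreed maximum age. This is a minimal sketch, assuming the “last updated” timestamps are available from your pipeline metadata (hard-coded here) and using a hypothetical one-hour freshness target.

```python
from datetime import datetime, timedelta, timezone

# Illustrative freshness target; the right value depends on how the data is used.
MAX_AGE = timedelta(hours=1)


def stale_tables(last_updated: dict, now: datetime, max_age: timedelta = MAX_AGE) -> list:
    """Return the names of tables whose most recent update is older than max_age."""
    return sorted(name for name, ts in last_updated.items() if now - ts > max_age)


now = datetime(2025, 3, 1, 12, 0, tzinfo=timezone.utc)
# Hypothetical metadata: when each table last received new data.
last_updated = {
    "sales_daily": now - timedelta(minutes=20),
    "web_events": now - timedelta(days=7),  # last week's data, silently stale
}

for table in stale_tables(last_updated, now):
    print(f"ALERT: {table} has not been updated within {MAX_AGE}")
```

In practice this would run on a schedule and page the data owner, so stale inputs are caught before a model or dashboard consumes them.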

- Schema-change alerts

What it means: A way to detect if someone added, removed, or renamed fields in your data tables.

In simpler terms: It’s like rearranging someone’s kitchen without telling them…suddenly the spoons aren’t where they’re expected, and everyone panics.

Why it matters for AI: If column names or formats change, your pipelines can break silently. Models may:

- misinterpret features

- produce errors

- or generate completely wrong outputs

This is one of the most common reasons AI pilots fail.
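A schema-change alert boils down to comparing the columns you expect with the columns you actually receive. The sketch below hard-codes both; in practice the observed schema might be read from your warehouse’s metadata (for example an `information_schema.columns` query), and the column names here are purely illustrative.

```python
def diff_schema(expected: dict, observed: dict) -> dict:
    """Compare two column -> type mappings and report added, removed, and retyped columns."""
    added = sorted(set(observed) - set(expected))
    removed = sorted(set(expected) - set(observed))
    retyped = sorted(c for c in set(expected) & set(observed) if expected[c] != observed[c])
    return {"added": added, "removed": removed, "retyped": retyped}


expected = {"customer_id": "string", "order_total": "decimal", "order_date": "date"}
# Someone renamed order_total to total and turned order_date into free text.
observed = {"customer_id": "string", "total": "decimal", "order_date": "string"}

changes = diff_schema(expected, observed)
if any(changes.values()):
    print("Schema change detected:", changes)
```

Note that a rename shows up as one column removed and one added; that is usually enough to stop the pipeline and ask the data owner before a model starts reading the wrong feature.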

- Backfill processes

What it means: A process that fills in historical gaps when data ingestion fails.

In simpler terms: Imagine your CCTV keeps recording, but the feed to your monitoring system drops for six hours. A backfill simply pulls in the footage that already existed once things are back online, instead of pretending those hours never happened.

Gaps in data reduce confidence in trends and forecasts, making planning cycles less reliable and harder to defend.
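Following the CCTV analogy, a backfill first finds which time slots are missing and then re-pulls exactly those slots from the source. This minimal sketch assumes hourly partitions and uses a stand-in `fetch_from_source` function; both are hypothetical, and a real source would have its own API.

```python
from datetime import datetime, timedelta, timezone
from typing import Callable


def missing_hours(loaded: set, start: datetime, end: datetime) -> list:
    """List every hourly slot in [start, end) that has no loaded data."""
    slots, t = [], start
    while t < end:
        if t not in loaded:
            slots.append(t)
        t += timedelta(hours=1)
    return slots


def backfill(loaded: set, start: datetime, end: datetime,
             fetch_from_source: Callable[[datetime], list]) -> dict:
    """Re-pull each missing hour from the source instead of leaving a gap."""
    recovered = {}
    for hour in missing_hours(loaded, start, end):
        recovered[hour] = fetch_from_source(hour)
        loaded.add(hour)  # mark the slot as filled
    return recovered


def fake_source(hour: datetime) -> list:
    """Stand-in for the real source system (hypothetical)."""
    return [{"hour": hour.isoformat(), "rows": 100}]


start = datetime(2025, 3, 1, 0, tzinfo=timezone.utc)
end = start + timedelta(hours=12)
# Ingestion dropped for six hours (04:00 to 10:00), but the source still has the data.
loaded = {start + timedelta(hours=h) for h in range(12) if not 4 <= h < 10}

recovered = backfill(loaded, start, end, fake_source)
print(f"Backfilled {len(recovered)} hours")
```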

- Data-quality thresholds

What it means: Rules that define how “clean” or “complete” your data must be before it’s allowed into downstream systems.

In simpler terms: Think of it like airport security for your data: dirty, broken, or suspicious records don’t get through.

Why it matters for AI: Poor-quality data leads to:

- hallucinations

- inconsistent recommendations

- failed evaluations

This is why engineering teams spend so much time on cleanup: more time goes into fixing data issues than improving products, which slows execution and increases operational costs.
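Here is what an “airport security” gate might look like for a batch of records. It is a sketch under stated assumptions: the two metrics (share of incomplete rows, share of duplicate keys) and the 2% / 1% limits are illustrative choices, not recommended values.

```python
# Illustrative limits: the batch is rejected if either rate exceeds its threshold.
THRESHOLDS = {"missing_rate": 0.02, "duplicate_rate": 0.01}


def quality_report(rows: list, required: list, key: str) -> dict:
    """Compute the share of incomplete rows and duplicate keys in a batch."""
    if not rows:
        raise ValueError("empty batch: nothing to evaluate")
    total = len(rows)
    incomplete = sum(1 for r in rows if any(r.get(c) in (None, "") for c in required))
    duplicates = total - len({r.get(key) for r in rows})
    return {"missing_rate": incomplete / total, "duplicate_rate": duplicates / total}


def passes_gate(report: dict, thresholds: dict = THRESHOLDS) -> bool:
    """True only if every metric is within its allowed limit."""
    return all(report[metric] <= limit for metric, limit in thresholds.items())


batch = [
    {"customer_id": "C1", "email": "a@example.com"},
    {"customer_id": "C2", "email": ""},               # missing value
    {"customer_id": "C2", "email": "b@example.com"},  # duplicate key
    {"customer_id": "C3", "email": "c@example.com"},
]

report = quality_report(batch, required=["customer_id", "email"], key="customer_id")
if passes_gate(report):
    print("Batch accepted")
else:
    print("Batch quarantined:", report)
```

Quarantining the batch, rather than silently dropping bad rows, keeps the problem visible to whoever owns the data.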

Low Integration Readiness

AI needs real-time pipelines, well-documented APIs, and interoperable systems.

Many organisations still run on:

- Batch exports

- CSV uploads

- Hard-coded logic

- Legacy ERPs

You cannot build real-time intelligence on top of manual processes.

What the 5% Do Differently

Forget complicated frameworks. If you strip away the buzzwords, AI-readiness comes down to four things: clean data, solid systems, clear rules, and teams that know what to do with them.

Data Readiness

AI can’t work if the data underneath it is broken. An AI-ready organisation has:

- One version of the truth: Everyone uses the same definitions for customer, order, revenue, and churn, and there are no competing dashboards.

- Data that is updated automatically: No manual exports. No “please refresh this report.” Data flows into systems in near real time.

- A clear map of where data lives: You know which tools store what, who owns them, and how data moves between them.

- Quality checks running in the background: Issues such as duplicates, missing values, and broken events are caught automatically, before your team runs into them.

System Readiness

You essentially need systems that talk to each other.

- Tools that integrate easily: Your CRM, CDP, analytics, and internal databases can pass data back and forth without hacks.

- The ability to plug new AI tools in without chaos: No brittle spreadsheets or one-person-only workflows.

- Monitoring in place: When things break (pipelines, APIs, ingestion), you’re notified before the business feels it.

- Secure access and permissions: Teams only access the data they need.

In simple terms: You have the infrastructure in place (the roads and traffic lights), not just the cars.
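“Teams only access the data they need” can be expressed as a simple allowlist of fields per role. This is a minimal sketch with hypothetical roles and columns; in a real setup these rules would normally live in your warehouse or identity and access management tooling rather than in application code.

```python
# Hypothetical role-to-column allowlist.
ROLE_COLUMNS = {
    "marketing": {"customer_id", "segment", "last_campaign"},
    "finance": {"customer_id", "lifetime_value"},
}


def authorised_view(role: str, record: dict) -> dict:
    """Return only the fields this role may see; unknown roles see nothing."""
    allowed = ROLE_COLUMNS.get(role, set())
    return {k: v for k, v in record.items() if k in allowed}


customer = {
    "customer_id": "C1",
    "segment": "VIP",
    "lifetime_value": 12500,
    "phone": "+971 50 000 0000",  # personal data no role here is granted
}

print(authorised_view("marketing", customer))
print(authorised_view("finance", customer))
```

Defaulting unknown roles to an empty view is the design choice that matters: access has to be granted deliberately, not removed after the fact.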

Governance Readiness

AI introduces risk. An AI-ready organisation has rules that guide how data and models are used.

- Consent and privacy are embedded in processes: Opt-ins, cookies, and permissions are all handled properly, not treated as a tickbox exercise.

- Clear ownership: A named person or team is accountable for data quality, approvals, and handling exceptions.

- Policies that people can actually follow: Not 98-slide decks, but actionable guidance on:

- what data can be used

- when it can be used

- where it lives

- who needs to approve it

- Ability to review and audit decisions: You can see what the AI recommended, what data it used, and whether it created risk.
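Being able to review AI decisions starts with recording them. The sketch below appends one JSON line per recommendation, capturing what was recommended, which data sources fed it, any risk flags, and who approved it. The record fields, model name, and source names are hypothetical; an in-memory stream stands in for what would normally be an append-only file or table.

```python
import io
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional, TextIO


@dataclass
class AIDecisionRecord:
    """Hypothetical audit entry for one AI recommendation."""
    model: str
    recommendation: str
    input_sources: list
    risk_flags: list = field(default_factory=list)
    approved_by: Optional[str] = None
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def log_decision(stream: TextIO, record: AIDecisionRecord) -> None:
    """Append one record as a JSON line so it can be reviewed later."""
    stream.write(json.dumps(asdict(record)) + "\n")


def read_decisions(stream: TextIO) -> list:
    """Read every logged record back from the start of the stream."""
    stream.seek(0)
    return [json.loads(line) for line in stream if line.strip()]


audit_log = io.StringIO()  # stand-in for an append-only file or table
log_decision(audit_log, AIDecisionRecord(
    model="churn-model-v3",
    recommendation="Offer retention discount to customer C1",
    input_sources=["crm.customers", "web.events"],
    risk_flags=["uses behavioural data"],
    approved_by="retention-team-lead",
))

for entry in read_decisions(audit_log):
    print(entry["recommendation"], "<-", entry["input_sources"])
```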

Team Readiness

AI doesn’t replace people; it supports them by taking on part of their workload. Here’s how to make the best use of AI systems.

- Defined AI use cases: Everyone knows what problems AI is solving and why.

- Cross-functional collaboration: Product, marketing, data, engineering, and compliance work together instead of in silos.

- Training and onboarding: Teams understand where AI helps, where it doesn’t, and how to check outputs.

- Performance metrics that include AI impact

The difference between the 95% that fail and the 5% that scale AI is simple:

They fix the foundations before they deploy the technology.

Once your data is unified, governed, and observable, AI stops being a risk and starts becoming a multiplier.

If you’re thinking about scaling AI in 2026, start with the parts no one sees: Clean data, connected systems, and aligned teams are not “nice-to-haves”…they are the non-negotiables for safe, useful AI.

At HEMOdata, we help organisations in the UAE and Saudi Arabia assess their readiness using real frameworks: from data quality and governance to integration and responsible AI. These will be covered in our next few blogs, so stay tuned.

If you want to understand where you stand today (and what to fix first), we’re here to help.